Published on

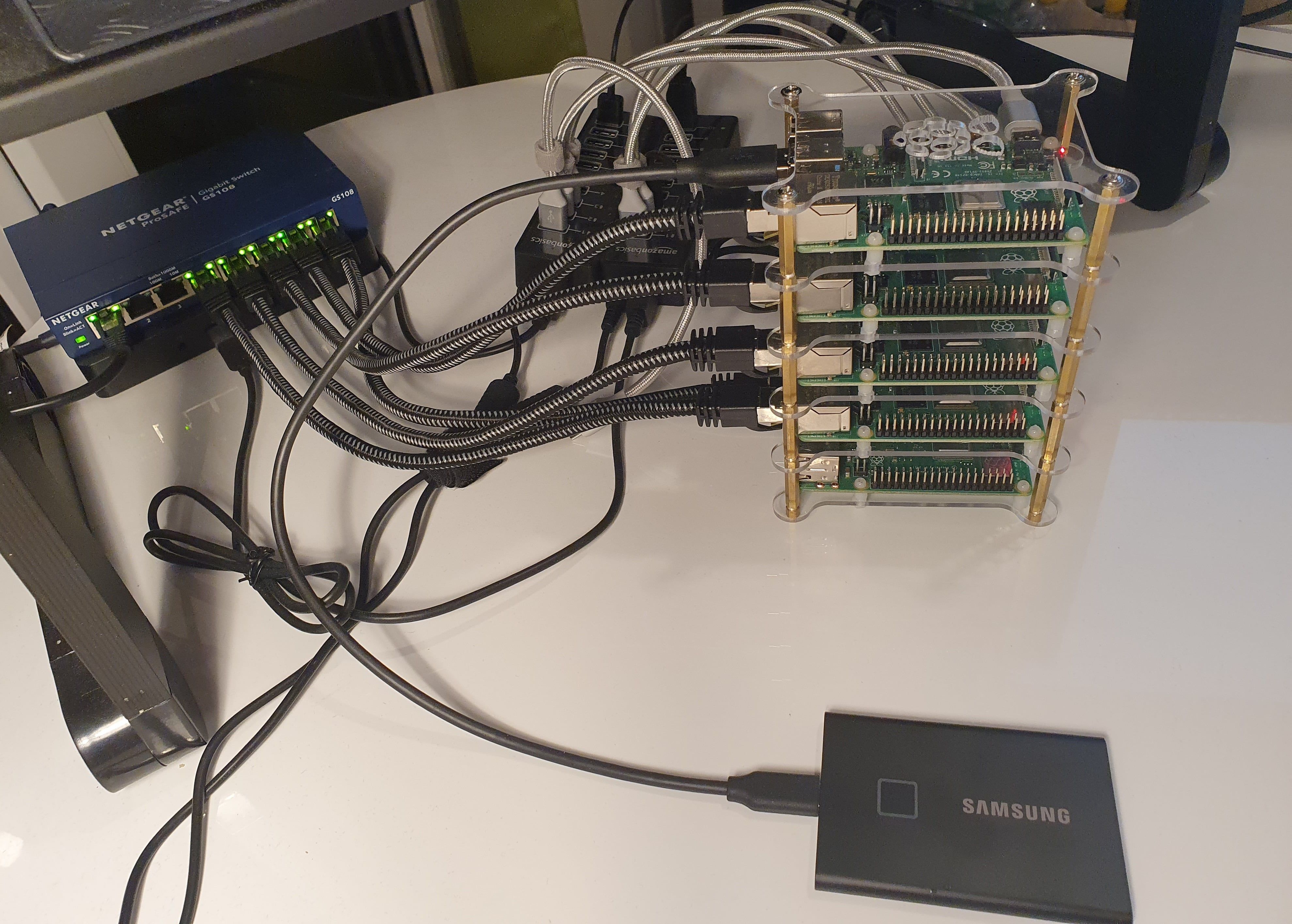

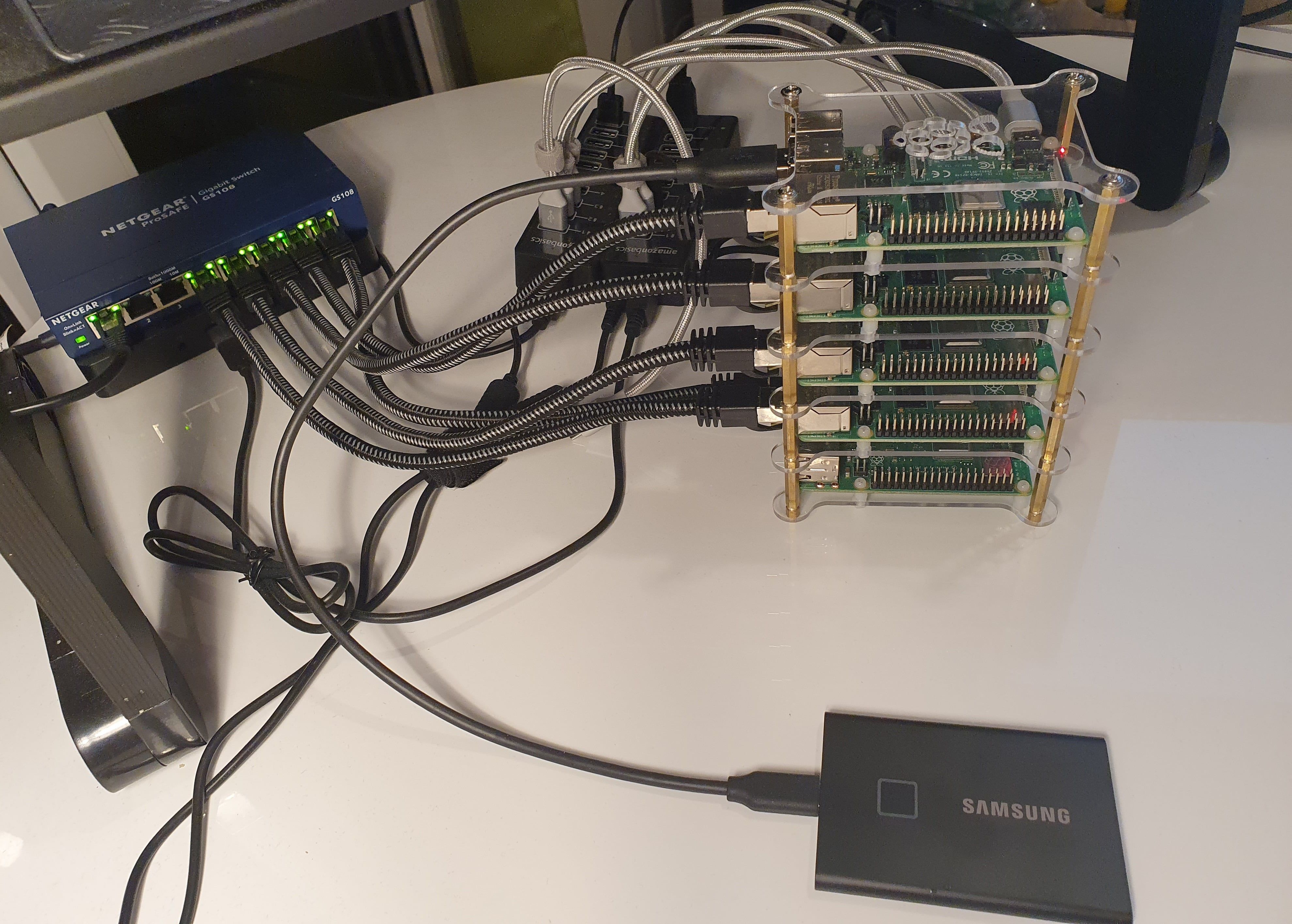

Build Your Own Kubernetes Cluster with K3s

Creating your own Kubernetes cluster on a Raspberry Pi doesn't take much effort, and it was a fun experience...